The LA Times recently faced intense backlash after its AI-powered tool generated a statement that appeared to defend the Ku Klux Klan (KKK). The AI, designed to provide opposing viewpoints on various topics, failed to contextualize the hate group’s history, and the resulting output shocked readers and damaged the publication’s credibility.

This incident highlights the risks of using artificial intelligence in journalism without proper oversight. AI can assist in generating content, but it lacks the nuanced understanding of history and ethics that human editors provide. The failure of the LA Times’ AI tool underscores the dangers of unvetted automated responses, especially on sensitive historical and political issues.

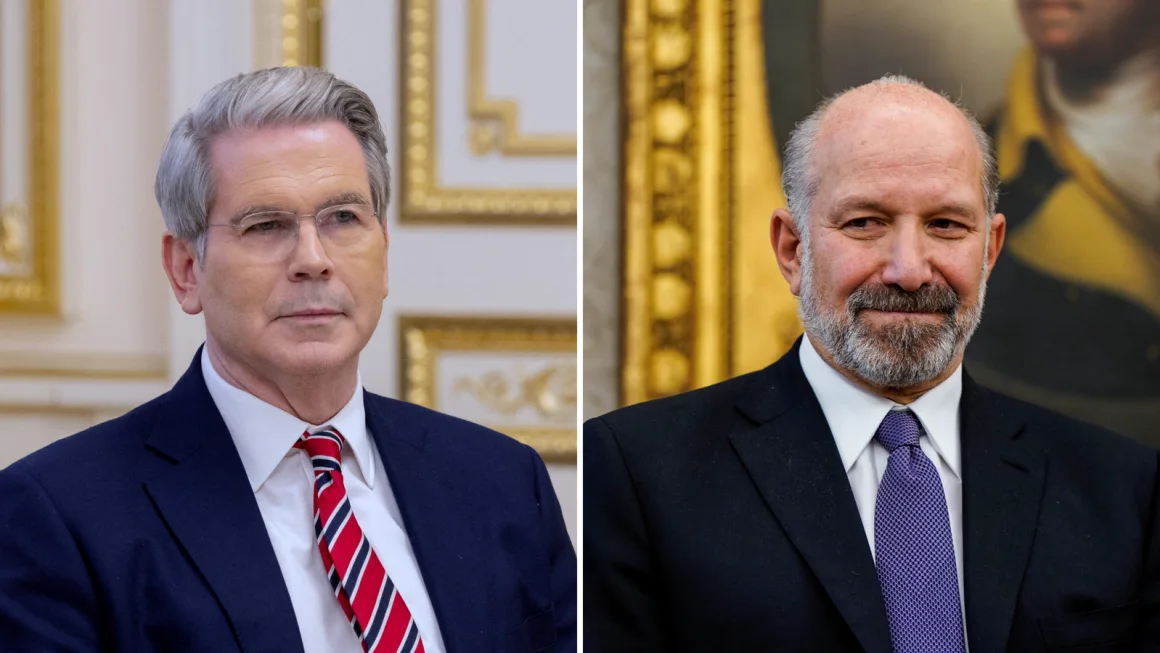

The controversy also raised questions about leadership oversight: the newspaper’s owner was reportedly unaware of the AI-generated statement until the issue had already escalated. This points to a broader accountability problem in AI-driven journalism. Without stringent guidelines and human intervention, AI-generated content can spread misinformation, provoke public outrage, and damage a publication’s reputation.

AI has the potential to enhance journalism, but it should be used as a supplement rather than a replacement for human editorial judgment. The LA Times’ blunder serves as a cautionary tale, reminding media organizations of the importance of responsible AI deployment, particularly when dealing with complex and historically significant subjects.